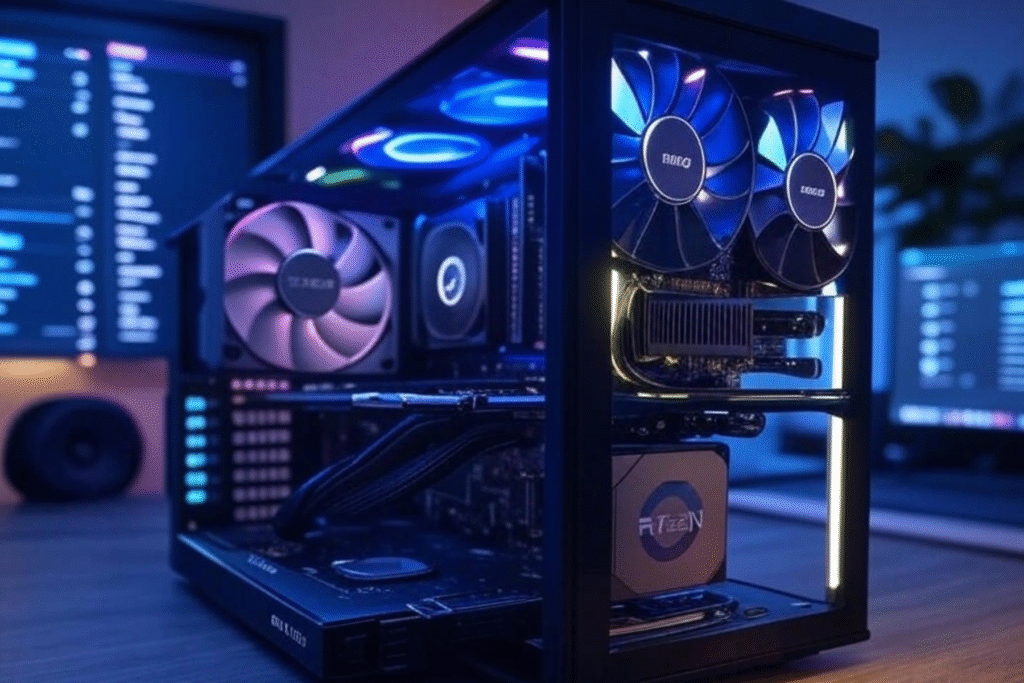

Hey there, AI enthusiasts! If you’re itching to dive into the world of open-source AI tools like DeepSeek R1 or Llama, you’re in for a treat. These tools let you tinker with powerful AI models right on your own computer—no cloud subscriptions needed. But here’s the catch: these models are hungry for computing power. I’ve been through the process of building a PC for AI work myself, and let me tell you, getting the right setup makes all the difference. In this guide, I’ll walk you through building a PC that can handle these tools, from basic must-haves to budget-friendly picks. Whether you’re a newbie or a seasoned coder, I’ve got you covered with practical tips and a personal touch.


Why Build a PC for Open-Source AI?

Running AI models like DeepSeek R1 or Llama on your own machine is a game-changer. Here’s why I love it:

- Total Privacy: Keep your data local, no cloud snooping.

- Save Money: Skip those pricey cloud bills.

- Make It Yours: Tweak models to fit your projects.

- Work Anywhere: No internet? No problem.

But let’s be real—these models need serious horsepower. Think beefy GPUs, tons of RAM, and cooling that doesn’t quit. I learned this the hard way when my old laptop crashed trying to run a small Llama model! Let’s break down what you need to get it right.

What Do You Need to Run Open-Source AI Tools?

Before we start picking parts, let’s talk about the basics. Tools like DeepSeek R1 or Llama vary in size and complexity, so your PC needs depend on whether you’re just running pre-trained models (inference) or tweaking them (training). Here’s the lowdown based on my experience:

1. GPU (The Muscle)

- What It Does: GPUs crunch the massive math behind AI models.

- Bare Minimum: An NVIDIA GPU with 8GB VRAM, like the RTX 3060, for small models.

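- My Pick: Go for 16GB+ VRAM (the RTX 3090 and 4090 both have 24GB) if you’re tackling bigger models. Even 24GB won’t hold Llama 70B by itself: at 4-bit the weights alone take roughly 35GB, so you’ll need a second GPU or offloading to system RAM. For DeepSeek R1, most home setups run the distilled versions (1.5B to 70B parameters), since the full 671B-parameter model is far too big for a single PC. These tools lean on NVIDIA’s CUDA, so stick with NVIDIA for now. AMD’s catching up, but it’s hit-or-miss.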
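A quick way to sanity-check VRAM is to multiply the parameter count by the bytes each parameter takes: 2 bytes at 16-bit, 1 byte at 8-bit, half a byte at 4-bit. The sketch below does that arithmetic. The 20% overhead for the KV cache, activations, and CUDA context is an assumed rule of thumb, not a measurement, and real usage grows with prompt length.

```python
"""Back-of-the-envelope VRAM check: weights = parameters x bits / 8.

The 1.2 overhead factor (KV cache, activations, CUDA context) is an assumed
rule of thumb, not a measured value; long prompts need more.
"""


def weights_gb(params_billion: float, bits_per_param: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_param / 8


def fits_in_vram(params_billion: float, bits_per_param: float,
                 vram_gb: float, overhead: float = 1.2) -> bool:
    """True if the weights plus the assumed overhead fit in vram_gb."""
    return weights_gb(params_billion, bits_per_param) * overhead <= vram_gb


if __name__ == "__main__":
    for params in (7, 13, 70):
        for bits in (16, 8, 4):
            need = weights_gb(params, bits) * 1.2
            print(f"{params}B @ {bits}-bit: ~{need:.1f} GB "
                  f"(12GB card: {fits_in_vram(params, bits, 12)}, "
                  f"24GB card: {fits_in_vram(params, bits, 24)})")
```

By this estimate a 4-bit 70B model still wants around 42GB, which is why a single 24GB card isn’t enough on its own, while a 4-bit 13B model fits on a 12GB card with room to spare.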

2. CPU (The Brains)

- What It Does: Handles data prep and lighter tasks.

- Bare Minimum: A 6-core CPU, like the AMD Ryzen 5 5600X.

- My Pick: An 8-core CPU like the Ryzen 7 7700X, or an Intel i7-13700K (8 performance cores plus 8 efficiency cores), keeps things snappy when you’re juggling tasks.

3. RAM (The Workspace)

- What It Does: Stores model data while you work.

- Bare Minimum: 16GB for small models.

- My Pick: 32GB or 64GB for big models. I once tried running Llama 13B with 16GB, and my PC begged for mercy!

4. Storage (The Library)

- What It Does: Holds models, datasets, and your OS.

- Bare Minimum: 512GB NVMe SSD.

- My Pick: 1TB SSD (like the Samsung 990 Pro) plus a 2TB HDD for datasets. Models can eat up space fast!

5. Power Supply (The Fuel)

- What It Does: Keeps everything running smoothly.

- Bare Minimum: 650W 80+ Gold PSU.

- My Pick: 850W or 1000W for future upgrades. Trust me, you don’t want your PC shutting down mid-training.
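To see where those wattages come from, you can add up the big power draws and leave headroom. This sketch uses NVIDIA’s rated board power for each GPU and AMD’s TDP for each CPU from the builds in this guide. The 100W for everything else and the 30% headroom are assumptions, not vendor guidance, and CPUs can briefly pull more than their TDP.

```python
"""Rough PSU sizing: (GPU + CPU + rest of system) x headroom, rounded up
to a common PSU size. The 100 W "rest of system" figure and the 1.3
headroom factor are assumptions, not vendor guidance."""

import math

GPU_BOARD_POWER_W = {"RTX 3060": 170, "RTX 4070 Ti": 285, "RTX 4090": 450}
CPU_TDP_W = {"Ryzen 5 5600X": 65, "Ryzen 7 7700X": 105, "Ryzen 9 7950X": 170}
COMMON_PSU_SIZES_W = [550, 650, 750, 850, 1000, 1200, 1600]


def estimated_load_w(gpu: str, cpu: str, rest_of_system_w: int = 100) -> int:
    """Sum of GPU board power, CPU TDP, and an assumed allowance for the rest."""
    return GPU_BOARD_POWER_W[gpu] + CPU_TDP_W[cpu] + rest_of_system_w


def suggested_psu_w(gpu: str, cpu: str, headroom: float = 1.3) -> int:
    """Smallest common PSU size that covers the load plus headroom."""
    need = estimated_load_w(gpu, cpu) * headroom
    for size in COMMON_PSU_SIZES_W:
        if size >= need:
            return size
    return math.ceil(need)  # larger than every size in the list
```

Under these assumptions the three builds below land at 550W, 650W, and 1000W, so the 650W, 850W, and 1000W units they use all clear the bar, and the mid-range pick leaves room for a hungrier GPU later.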

6. Cooling (The Chill Factor)

- What It Does: Stops your PC from turning into a toaster.

- Bare Minimum: Stock CPU cooler and a couple of case fans.

- My Pick: A 240mm liquid cooler for the CPU and a high-airflow case to keep cool air moving over the GPU. My GPU hit 85°C once. Never again!

7. Operating System

My Pick: Ubuntu 22.04 LTS for its open-source vibe and CUDA support. Windows 11 works too, but I find Linux smoother for AI.

8. Motherboard (The Backbone)

- What It Does: Ties everything together.

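- Bare Minimum: B550 (AMD AM4, e.g., Ryzen 5 5600X), B650 (AMD AM5, e.g., Ryzen 7 7700X), or B760 (Intel). Match the chipset to your CPU’s socket.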

- My Pick: X670 (AMD) or Z790 (Intel) for extra PCIe lanes and upgrade room.

PC Builds for Every Budget

I’ve put together three builds based on my own tinkering and research. Prices are rough estimates (as of May 2025) and can vary, so shop around!

Budget Build (~$1,200)

Perfect for running smaller models like Llama 7B or a quantized DeepSeek R1 distilled model (the 7B or 8B versions).

- GPU: NVIDIA RTX 3060 12GB ($300)

- CPU: AMD Ryzen 5 5600X (6-core) ($150)

- RAM: 16GB DDR4 3200MHz ($50)

- Storage: 512GB NVMe SSD (Kingston NV2) ($40)

- Motherboard: MSI B550-A PRO ($100)

- PSU: 650W 80+ Gold (Corsair RM650x) ($80)

- Cooling: Stock cooler + 2 case fans ($30)

- Case: NZXT H510 ($70)

- OS: Ubuntu 22.04 LTS (Free)

Why I Like It: This build got me started with AI. It’s affordable and handles inference like a champ, but don’t expect to train big models.

Mid-Range Build (~$2,500)

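Great for Llama 13B inference and light training of smaller models. Llama 70B is only possible by offloading most of it to system RAM, which works but is slow.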

- GPU: NVIDIA RTX 4070 Ti 12GB ($700)

- CPU: AMD Ryzen 7 7700X (8-core) ($300)

- RAM: 32GB DDR5 5200MHz ($120)

- Storage: 1TB NVMe SSD (Samsung 990 Pro) + 2TB HDD ($150)

- Motherboard: ASUS ROG Strix X670E-A ($250)

- PSU: 850W 80+ Gold (Seasonic Focus GX-850) ($120)

- Cooling: NZXT Kraken X63 280mm AIO + 4 case fans ($150)

- Case: Lian Li Lancool 205 Mesh ($100)

- OS: Ubuntu 22.04 LTS (Free)

Why I Like It: This is my current setup. It’s a sweet spot for most AI tasks and doesn’t break the bank.

High-End Build (~$5,000+)

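Built for training smaller models and running big ones. A quantized Llama 70B fits only with part of the model offloaded to system RAM; full precision (roughly 140GB of weights) is out of reach for any single consumer GPU.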

- GPU: NVIDIA RTX 4090 24GB ($1,600)

- CPU: AMD Ryzen 9 7950X (16-core) ($550)

- RAM: 64GB DDR5 6000MHz ($250)

- Storage: 2TB NVMe SSD (WD Black SN850X) + 4TB HDD ($250)

- Motherboard: MSI MPG X670E Carbon WiFi ($350)

- PSU: 1000W 80+ Platinum (Corsair HX1000) ($200)

- Cooling: NZXT Kraken Z73 360mm AIO + 6 case fans ($250)

- Case: Fractal Design Meshify 2 ($150)

- OS: Ubuntu 22.04 LTS (Free)

Why I Like It: This is the dream rig for serious AI work. It’s overkill for most, but if you’re training models, it’s worth every penny.

Tip: Check Amazon, Newegg, or Micro Center for the best deals.
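If you add up the itemized prices, each list comes in under its headline figure: about $820, $1,890, and $3,600. The headline numbers presumably leave room for things the lists don’t cover, such as a monitor, peripherals, tax, or price swings. That’s an assumption, so it’s worth tallying your own cart the same way.

```python
"""Sum the itemized part prices from the three builds (May 2025 estimates)."""

BUILDS = {
    "Budget": {"GPU": 300, "CPU": 150, "RAM": 50, "Storage": 40,
               "Motherboard": 100, "PSU": 80, "Cooling": 30, "Case": 70},
    "Mid-Range": {"GPU": 700, "CPU": 300, "RAM": 120, "Storage": 150,
                  "Motherboard": 250, "PSU": 120, "Cooling": 150, "Case": 100},
    "High-End": {"GPU": 1600, "CPU": 550, "RAM": 250, "Storage": 250,
                 "Motherboard": 350, "PSU": 200, "Cooling": 250, "Case": 150},
}


def total(parts: dict[str, int]) -> int:
    """Total of all listed part prices in dollars."""
    return sum(parts.values())


def gpu_share(parts: dict[str, int]) -> float:
    """Fraction of the parts budget that goes to the GPU."""
    return parts["GPU"] / total(parts)


if __name__ == "__main__":
    for name, parts in BUILDS.items():
        print(f"{name}: ${total(parts):,} in parts, GPU is {gpu_share(parts):.0%}")
```

The GPU is the single biggest line item in every build, which matches the advice to pick it first.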

How to Build Your AI PC: My Step-by-Step Process

Building a PC can feel daunting, but it’s like assembling LEGO with a purpose. Here’s how I do it:

1. Figure Out Your Needs

- Ask yourself: Am I just running models or training them? For example, DeepSeek R1 inference works on a mid-range GPU, but training needs more juice.
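To put numbers on "more juice": a common rule of thumb for full fine-tuning with the Adam optimizer in mixed precision is about 16 bytes per parameter (16-bit weights and gradients, a 32-bit master copy of the weights, and two 32-bit optimizer states), before counting activations. Running the same model at 16-bit needs about 2 bytes per parameter. The sketch below just applies those assumed figures.

```python
"""Rule-of-thumb memory for running vs. fully fine-tuning a model.

Assumed bytes per parameter (activations and KV cache NOT included):
- inference in 16-bit: 2 bytes (the weights only)
- full fine-tuning with Adam, mixed precision: 16 bytes
  (2 fp16 weights + 2 fp16 gradients + 4 fp32 master copy + 8 Adam states)
"""

BYTES_PER_PARAM = {"inference_16bit": 2, "full_finetune_adam": 16}


def memory_gb(params_billion: float, mode: str) -> float:
    """Approximate GB needed (1 GB = 1e9 bytes) for the given mode."""
    return params_billion * BYTES_PER_PARAM[mode]


if __name__ == "__main__":
    for params in (7, 13):
        print(f"{params}B: run ~{memory_gb(params, 'inference_16bit'):.0f} GB, "
              f"full fine-tune ~{memory_gb(params, 'full_finetune_adam'):.0f} GB")
```

Even a 7B model lands around 112GB for full fine-tuning by this estimate, far beyond any single consumer card, which is why home setups usually lean on quantization and lighter fine-tuning methods such as LoRA instead.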

2. Pick Your Parts

- Focus on GPU and RAM first. Double-check that your motherboard uses the same socket as your CPU, that it has a PCIe x16 slot for your GPU, and that your case and PSU can fit and power the card.
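The socket check is easy to do on paper. This sketch hard-codes sockets for the CPUs in this guide and a handful of chipsets: AM4 for the Ryzen 5000 series and B550, AM5 for Ryzen 7000 and B650/X670/X670E (AM5 boards also take DDR5 only), and LGA1700 for 13th-gen Intel with B760/Z790. It’s a simplified lookup, not a substitute for the motherboard maker’s CPU support list, which also tells you whether a BIOS update is needed.

```python
"""Check that a CPU and a motherboard chipset share the same socket.

Simplified lookup for a few parts; a matching socket does not guarantee
support (some boards need a BIOS update), so check the board's CPU list.
"""

CPU_SOCKET = {
    "Ryzen 5 5600X": "AM4",
    "Ryzen 7 7700X": "AM5",
    "Ryzen 9 7950X": "AM5",
    "Core i7-13700K": "LGA1700",
}

CHIPSET_SOCKET = {
    "B550": "AM4",
    "B650": "AM5",
    "X670": "AM5",
    "X670E": "AM5",
    "B760": "LGA1700",
    "Z790": "LGA1700",
}


def same_socket(cpu: str, chipset: str) -> bool:
    """True only if both parts are known and use the same socket."""
    cpu_socket = CPU_SOCKET.get(cpu)
    return cpu_socket is not None and cpu_socket == CHIPSET_SOCKET.get(chipset)


if __name__ == "__main__":
    for cpu, chipset in [("Ryzen 5 5600X", "B550"), ("Ryzen 7 7700X", "B550"),
                         ("Ryzen 9 7950X", "X670E"), ("Core i7-13700K", "Z790")]:
        print(f"{cpu} + {chipset}: {'OK' if same_socket(cpu, chipset) else 'mismatch'}")
```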

3. Put It Together

- What You’ll Need: A screwdriver, an anti-static wrist strap, and some patience.

- Steps:

- Pop the CPU into the motherboard socket and add the cooler.

- Slot in your RAM sticks.

- Screw the motherboard into the case.

- Plug the GPU into a PCIe slot.

- Connect the PSU cables to the motherboard, GPU, and drives.

- Add fans and hook up your cooling system.

Tip: Watch a YouTube build guide for your case.

4. Install the OS

- Grab Ubuntu 22.04 LTS or Windows 11 from their official sites.

- Use a tool like Rufus (Windows) or balenaEtcher to make a bootable USB.

- Boot from the USB, install the OS, and install the latest NVIDIA drivers (critical for CUDA).
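One check worth doing before you flash the USB: compare the ISO against the checksum file published alongside it (Ubuntu’s release pages provide a SHA256SUMS file). Here’s a minimal sketch using only Python’s standard library; the file names in the example comment are placeholders.

```python
"""Verify a downloaded ISO against a SHA256SUMS-style checksum list."""

import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so multi-GB ISOs don't fill RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def parse_sums(text: str) -> dict[str, str]:
    """Map file name -> hash from lines like '<hash> *<file name>'."""
    sums = {}
    for line in text.splitlines():
        parts = line.split(maxsplit=1)
        if len(parts) == 2:
            sums[parts[1].strip().lstrip("*")] = parts[0].lower()
    return sums


def verify_iso(iso_path: Path, sums_text: str) -> bool:
    """True if the ISO's name is listed and its SHA-256 matches."""
    expected = parse_sums(sums_text).get(Path(iso_path).name)
    return expected is not None and sha256_of(iso_path) == expected


# Example (placeholder file names; use the ones you actually downloaded):
# ok = verify_iso(Path("ubuntu-22.04-desktop-amd64.iso"),
#                 Path("SHA256SUMS").read_text())
```

If the check fails, download the ISO again rather than flashing it; a corrupted image can cause confusing install errors.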

5. Set Up Your AI Tools

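- Install Python and a CUDA-enabled build of PyTorch. The official PyTorch packages ship with the CUDA libraries they need, so you only need the separate CUDA toolkit if you plan to compile CUDA code yourself.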

- Download model weights from Hugging Face or GitHub.

- Try quantization (e.g., with bitsandbytes) to squeeze big models onto smaller GPUs.
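Here’s a minimal sketch of these steps in Python, assuming you’ve installed torch, transformers, accelerate, and bitsandbytes with pip. It first reports whether PyTorch can see your GPU, then shows one common way to load a model in 4-bit through Hugging Face Transformers’ BitsAndBytesConfig. The model ID in the example comment is just one DeepSeek R1 distilled model on Hugging Face; swap in whatever you downloaded.

```python
"""Check the CUDA setup, then load a causal LM in 4-bit via bitsandbytes.

Assumes: pip install torch transformers accelerate bitsandbytes
"""


def cuda_report() -> dict:
    """Summarize what PyTorch can see; works even if torch is missing."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False}
    report = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        "cuda_available": torch.cuda.is_available(),
        "cuda_build": torch.version.cuda,  # None on CPU-only builds
    }
    if report["cuda_available"]:
        report["gpu"] = torch.cuda.get_device_name(0)
    return report


def load_4bit(model_id: str):
    """Load tokenizer and model with 4-bit NF4 weights, spread over GPU/CPU."""
    import torch
    from transformers import (AutoModelForCausalLM, AutoTokenizer,
                              BitsAndBytesConfig)

    quant = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_quant_type="nf4",
        bnb_4bit_compute_dtype=torch.float16,
    )
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, quantization_config=quant, device_map="auto"
    )
    return tokenizer, model


# Example usage (downloads several GB on first run):
# print(cuda_report())
# tok, model = load_4bit("deepseek-ai/DeepSeek-R1-Distill-Qwen-7B")
# inputs = tok("Hello!", return_tensors="pt").to(model.device)
# out = model.generate(**inputs, max_new_tokens=50)
# print(tok.decode(out[0], skip_special_tokens=True))
```

If `cuda_available` comes back False on a machine with an NVIDIA card, the usual suspects are a missing driver or a CPU-only PyTorch install.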

6. Test It Out

- Fire up a small model like Llama 7B to make sure everything’s working.

- Run nvidia-smi while the model is generating. If GPU utilization and memory use jump, the model is running on your GPU rather than the CPU.
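If you’d rather log those numbers than eyeball them, nvidia-smi can print selected fields as CSV. This sketch parses that output and flags any GPU at or above 80°C, in line with the cooling target in the tips below; the threshold and the field list are choices you can change.

```python
"""Read GPU temperature, utilization and memory from nvidia-smi's CSV output."""

import subprocess

FIELDS = ["temperature.gpu", "utilization.gpu", "memory.used", "memory.total"]


def _to_int(value: str):
    """nvidia-smi prints '[N/A]' for unsupported fields; map that to None."""
    value = value.strip()
    return int(value) if value.isdigit() else None


def parse_nvidia_smi(csv_text: str) -> list[dict]:
    """Parse 'noheader,nounits' CSV: one line per GPU, fields in FIELDS order."""
    rows = []
    for line in csv_text.strip().splitlines():
        temp, util, used, total = (_to_int(v) for v in line.split(","))
        rows.append({"temp_c": temp, "util_pct": util,
                     "mem_used_mib": used, "mem_total_mib": total})
    return rows


def read_gpus() -> list[dict]:
    """Run nvidia-smi once (requires the NVIDIA driver to be installed)."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={','.join(FIELDS)}",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_nvidia_smi(result.stdout)


def hot_gpus(rows: list[dict], max_temp_c: int = 80) -> list[str]:
    """Describe every GPU whose temperature is at or above max_temp_c."""
    return [f"GPU {i}: {r['temp_c']}°C"
            for i, r in enumerate(rows)
            if r["temp_c"] is not None and r["temp_c"] >= max_temp_c]


# Example: rows = read_gpus(); print(rows, hot_gpus(rows))
```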

My Top Tips for a Smooth AI PC

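- Quantize Models: Tools like bitsandbytes shrink models to 8-bit or 4-bit. At 4-bit, a 13B model fits comfortably on a 12GB GPU, and even Llama 70B drops to roughly 35GB, which puts it within reach of a two-GPU setup or partial offloading to system RAM. It’s a lifesaver.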

- Keep It Cool: My GPU used to overheat until I added extra case fans. Aim for <80°C.

- Save Power: Turn on eco modes when you’re not running AI tasks—my electric bill thanks me.

- Use Good Software: Hugging Face Transformers is my go-to for easy model setup.

Mistakes I Made (So You Don’t Have To)

- Skimping on VRAM: I thought 8GB would cut it for Llama 13B. Spoiler: It didn’t.

- Ignoring Cooling: My first build had one fan. Cue shutdowns mid-run.

- Wrong Parts: I once bought a motherboard that didn’t fit my CPU. Always check compatibility!

- Old Drivers: Outdated NVIDIA drivers broke PyTorch’s CUDA support for me. Update everything.

Final Thoughts

Building a PC for open-source AI tools like DeepSeek R1 or Llama is like crafting your own superhero. It’s a bit of work, but the payoff—running cutting-edge AI on your terms—is so worth it. Whether you go budget or all-out, the right GPU, RAM, and cooling will set you up for success. I’m still tweaking my setup, and I’d love to hear about yours! Drop your budget or dream build in the comments, and let’s geek out together.

Frequently Asked Questions

What GPU is best for running Llama models?

The best GPU depends on the Llama model size. For Llama 7B or 13B with quantization, an NVIDIA RTX 3060 (12GB VRAM) works fine. For larger models, an RTX 4090 (24GB VRAM) is the strongest single consumer card, but Llama 70B still won’t fit on it alone, even at 4-bit, so plan on a second GPU or offloading part of the model to system RAM. I’ve found NVIDIA’s CUDA support makes these GPUs a top choice for AI workloads.

Can I build a PC for AI under $1,000?

Yes, but with limitations! At the prices listed in the budget build above, the parts themselves (RTX 3060, Ryzen 5 5600X, 16GB RAM, and the rest) add up to roughly $820 before a monitor, peripherals, or tax. That’s enough for inference on smaller models like Llama 7B. Training or running bigger models will need more investment, so plan accordingly based on your needs.

Do I need a high-end CPU for open-source AI tools?

Not always. A 6-core CPU like the Ryzen 5 5600X is enough for inference, but for training or multitasking, a beefier chip like the 8-core Ryzen 7 7700X or the 16-core Ryzen 9 7950X shines. I upgraded to an 8-core CPU, and it made a huge difference when preprocessing data!

Is Linux better than Windows for AI PCs?

Linux, especially Ubuntu 22.04 LTS, is my go-to for AI work due to its open-source compatibility and CUDA support. Windows 11 works too, but I’ve noticed smoother performance with AI frameworks like PyTorch on Linux. It’s a personal preference—try both if you’re new!

How much storage do I need for AI models?

Start with a 512GB SSD for the OS and a few models, but 1TB+ is better if you’re downloading large datasets or multiple models like DeepSeek R1 and Llama. I added a 2TB HDD for backups, and it’s been a lifesaver!

Can I use an AMD GPU for open-source AI tools?

AMD GPUs are improving with ROCm support, but compatibility with AI frameworks like PyTorch is still limited compared to NVIDIA’s CUDA. I’d stick with NVIDIA for now unless you’re comfortable troubleshooting AMD setups.

How do I keep my PC cool during AI tasks?

Good cooling is key! Use a 240mm+ liquid cooler for the CPU and ensure your case has high airflow with extra fans. My GPU hit 85°C once before I added more fans—keeping it under 80°C is my goal now!

Where can I buy parts for an AI PC build?

Check out Amazon, Newegg, or Micro Center for deals on GPUs like the RTX 4090 and CPUs like the Ryzen 9 7950X. I scored my parts during a sale on Newegg—compare prices and watch for discounts!
